Imagine being able to dream up a picture, describe it to a computer, and have it delivered to you within seconds as a .png file. Believe it or not, you and I can do that today. We just need some help from a pal called DALL-E 2.

It’s surreal

You might have already guessed that this has something to do with artificial intelligence (AI). A wave of new apps, based upon ‘generative’ artificial intelligence models, can create images based on text descriptions entered by a user. Computer scientists call this phenomenon ‘hallucination’. I recently began experimenting with DALL-E 2, one such application released for public use in beta version. In this article, I share examples of the system in action as well as provide a gentle introduction to the basics of artificial intelligence for those unfamiliar with this branch of computer science.

DALL-E is a play on the name of the Pixar movie robot WALL-E, tweaked to sound like the surname of the surrealist artist Salvador Dali. DALL-E 2 is the latest version.

I put DALL-E 2 through its paces by entering brief descriptions of photographs in my collection, a number of which have been featured in recent Macfilos articles. I also entered descriptions of novel images I hoped the system would generate, inspired in some cases by the work of renowned photographers associated with Macfilos. In each case, DALL-E 2 generated four remarkable images within twenty seconds of typing in a text string and hitting return. I have included a number of them throughout the article. I am intrigued to hear what you make of them.

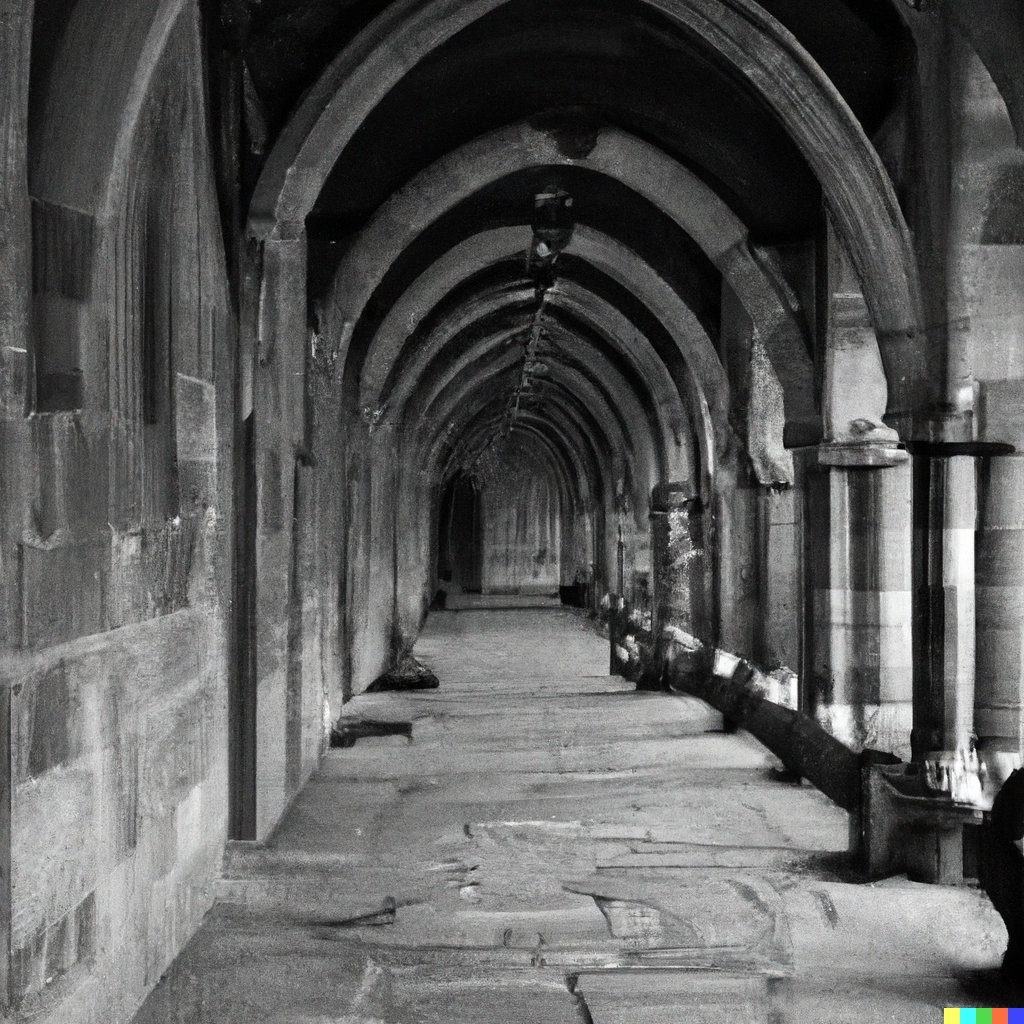

To whet your appetite, here is a matched pair: on the left, my photograph; on the right, the image DALL-E 2 generated in response to the description: ‘A view down a long, medieval colonnade towards a distant vanishing point in black and white’.

Just to be clear, that computer-hallucinated image did not exist previously and was not patched together from existing images. Vast amounts of data and enormous computing power have enabled DALL-E 2’s underlying algorithm to learn the relationship between images and words that describe them. Pixels in the image were arranged by that algorithm to yield an optimal representation of the text string based on the text ↔ image dataset used in its training.

The intelligent machine

DALL-E 2 is a powerful example of how our lives are being transformed through artificial intelligence. If you have an iPhone which opens when you look at it, you are enjoying the facial recognition abilities of AI. If you use Google Translate to convert French prose into English, you are enjoying its natural language processing abilities. If you instruct your Amazon Echo to tell you today’s weather forecast, you are enjoying its voice recognition abilities.

Artificial intelligence is already reshaping photography: enabling automatic focusing on human faces; generating artificial bokeh in iPhone portrait mode; organising our photographs according to people categories; and selecting photographs we might like on our Instagram feed. DALL-E 2 and other generative AI systems represent a further step in this trend.

Here is its interpretation of the phrase I used to describe another of my photographs (on the left): ‘An abstract image from a brightly coloured building with deep shadows’

Machine learning (ML) is the branch of AI enabling these feats. Machine learning algorithms develop capabilities by learning from information supplied to them rather than through explicit programming. They learn iteratively as more data is supplied, generating models with more and more predictive power. Shown a chest X-ray, a suitably trained machine learning model can diagnose the presence of disease with high accuracy and, in some cases, more accurately than human pathologists.

Machine learning systems employ neural networks, computational frameworks inspired by the human brain and its billions of neural connections. A basic neural network comprises an input layer of tens or hundreds of ‘neurons’, a ‘hidden’ layer of connected neurons, and an output layer. Deep learning (DL) systems are even more sophisticated, employing multiple hidden layers. Together with massively parallel cloud computing resources comprising hundreds of thousands of graphic processing units (GPUs), mind-bogglingly powerful models can be built.

The next photo pair provides an especially convincing example of the power of DALL-E 2. I recently described my experience of photographing the work of Catalan architect Antoni Gaudi and his use of trencadis — multi-coloured, broken-tile mosaics — to cover exterior surfaces. The challenge I posed DALL-E 2 was to interpret the description: ‘Looking up at a wall covered in tiles in different shades of blue in the style of Antoni Gaudi’.

The adjective ‘amazing’ is overused to the point of banality, but I confess, it’s what I uttered aloud when I saw DALL-E 2’s creation.

Macfilos meets DALL-E 2

Lest anyone suspect that DALL-E 2’s performance thus far benefitted from some sort of computational skullduggery, exploiting privileged access to images on my device, I also tried describing other people’s photos. What better place to start than with the efforts of Jonathan Slack? Aware of his penchant for testing out camera gear while on vacation in Crete, often looking down at picturesque inlets, DALL-E 2 and I conjured up some shots of our own, using the description: ‘Looking down on a beach in Crete with tall cliffs on the left in the style of Jonathan Slack’.

What do you make of it Jono?

Given his prolific contributions to Macfilos, I could not leave out Jörg-Peter Rau, so I typed in: ‘Street photography in a German city in summertime in the style of Joerg-Peter Rau’.

Ah well, it seems DALL-E 2 was going for a more impressionistic rendering in this case, with over-exposure thrown in for good measure. Perhaps DALL-E 2 should read Joerg-Peter’s series on light meters.

My final effort in this genre was inspired by a photograph on Thorsten Overgaard’s website, not one he had taken but one I thought I could describe accurately to my algorithmic partner in crime: ‘Man in a bowler hat and dark suit kissing a blonde woman in a dark dress standing on the roof of a yellow taxi in a traffic jam of yellow taxis’.

A commendable effort, I would say. Perhaps adding ‘telephoto shot’ to the input phrase would have yielded an even closer reproduction, but at some point, I would run into the character limit of the free text field.

Adult supervision

Machine learning models are built through either ‘supervised’ or ‘unsupervised’ learning. Supervised learning uses data which has been labelled. In a typical case, the system is informed that these are photos of cats and those are photos of dogs. The algorithm learns to recognise cats versus dogs and classify a new image as one or the other.

Unsupervised learning involves supplying the system with a large body of unstructured data, for example, images. The system determines which features of the images are important in assigning them to specific regions within a multi-dimensional virtual space; images of wheeled vehicles would be assigned to one domain, and images of creatures with legs to another. Within the wheeled-vehicle domain, images of tractors would be in a region distinct from images of automobiles and so on.

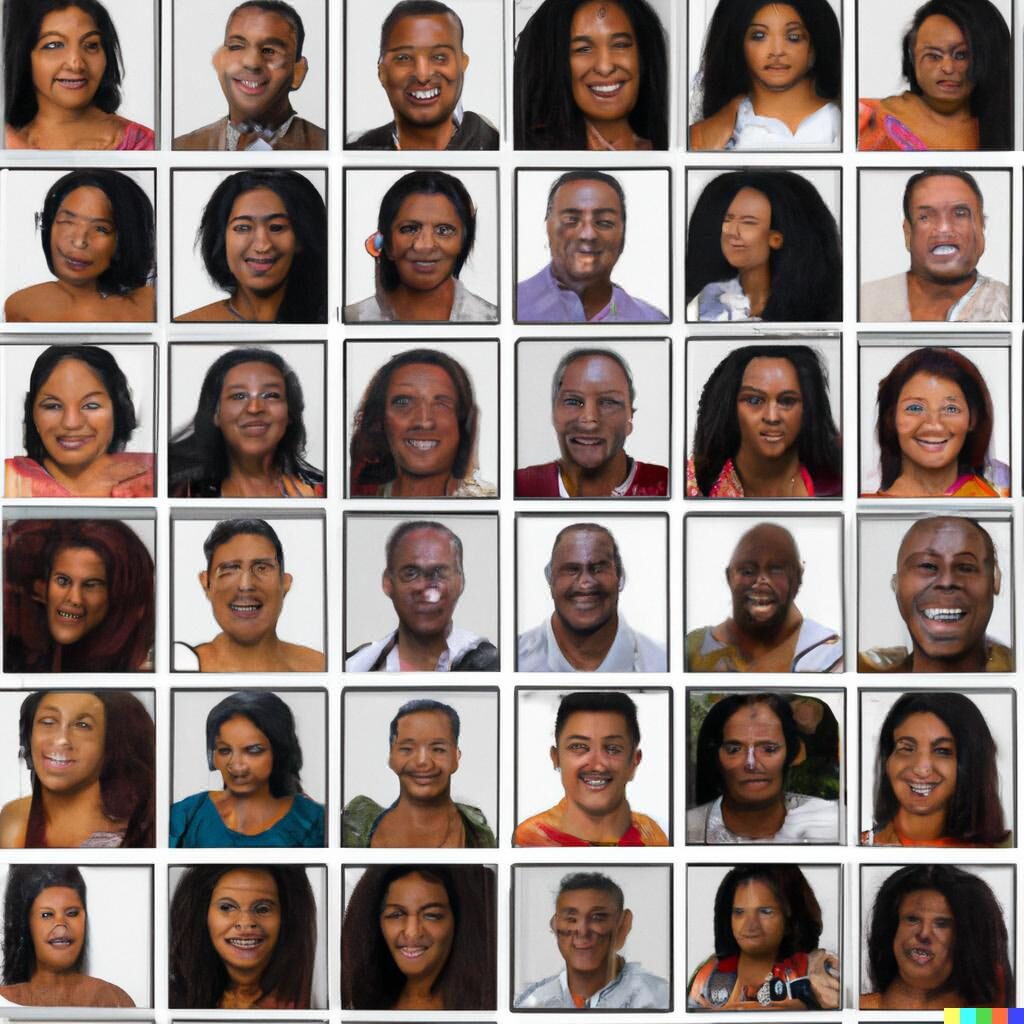

A simple example of a deep learning model built through this unsupervised approach would be the classification of people’s faces. The algorithm would group photographs of women in a different region of multi-dimensional virtual space from photographs of men. The system has not been informed that there are such things as men and women. Still, based on the arrangement of pixels in the photographs, it learns that there are generally two distinct kinds of images within the data.

This was my attempt to recruit DALL-E 2’s help in illustrating this point. I plugged in: ‘Random photographs of diverse men and women in a six by six grid with equal numbers of men and women’. Not one of its finest moments.

One of the most impressive recent accomplishments in the deep learning field is AlphaFold. Built by DeepMind Technologies, this computational model can predict the structure of all soluble human proteins with accuracy comparable to experimental-based approaches such as X-ray crystallography. I digress briefly here to wave the flag and point out that DeepMind is a British AI division of Alphabet, the parent company of Google, based in London. Some might view the UK as having lost its way politically and economically in recent years, but in the field of artificial intelligence, the Brits are world-class!

Imagination unleashed

As fun as it was to see how DALL-E 2 interpreted descriptions of photographs as hallucinated images, it was even more fun exploring how it would interpret descriptions dreamed up from scratch. Here’s what DALL-E 2 made of: ‘Sunlit mountain peak covered in snow surrounded by swirling storm clouds”.

The snow-capped mountain image at the beginning of the article was also a hallucination by DALL-E 2 in response to this prompt. Readers familiar with 1960s rock music will know the origins of the pseudonym to whom the photo was credited.

And here’s its response to: ‘Parched desert floor with close up of mud tiles mountains in the distance’

To conclude, here’s a limited edition suggestion for the wizards of Wetzlar: ‘a red Leica camera with a black Leica logo viewed at 45 degrees’.

Apart from the fact that we are viewing it straight on rather than at forty-five degrees, I think this is a splendid interpretation and definitely a model I would purchase.

DALL-E 2 dalliance

Do I think generative AI systems like DALL-E 2 will render photography obsolete? No.

Do I think they will have a significant impact on the creative arts? Yes.

While my exploration of the application was motivated by curiosity and a fascination with machine learning, it is already being used in earnest by content creators as part of their workflow. Rather than spend hours sketching out images to illustrate a design proposal or paying an artist to do it for them, a professional in the field can use DALL-E 2 to generate perfectly usable ones.

I expect that generative artificial intelligence systems will only improve with time and access to more data and yet more computing power. I should also point out that they are not confined to the graphic arts. So-called ‘large language models’ such as GPT-3 are capable of writing a narrative based upon a supplied prompt. There is an entirely different story to be told about the capabilities of GPT-3, but it would feature no photographs and so is not for Macfilos. Then again, I wonder if it could compose the text of my next article…

I will leave you with a final DALL-E 2 interpretation: ‘I love digital photography’.

I hope you enjoyed this account of DALL-E 2 and our brief excursion into the world of machine learning. Let me know in the comments what you made of the hallucinated images, whether you have tried out the system yourself, or if you have any (easy please!) questions about artificial intelligence or machine learning.

Read more from the author

Make a donation to help with our running costs

Did you know that Macfilos is run by five photography enthusiasts based in the UK, USA and Europe? We cover all the substantial costs of running the site, and we do not carry advertising because it spoils readers’ enjoyment. Any amount, however small, will be appreciated, and we will write to acknowledge your generosity.

Reminds me of taking my grandson to WALL-E when he was about 11. It was good to view the post apocalyptic world through a child’s eyes. Also when WALL-E fell in love with a ‘female’ robot, the soundtrack played Louis Armstrong’s version of ‘La Vie En Rose’ and how proud was I when my grandson was the only kid in the cinema to recognise Louis? Speaking of rose tinted glasses, it is easy to see the attraction of a machine that can create images, but the whole point about hobby or commercial photography, to distinguish them from technical, security or industrial photography, is to create an image which the photographer wants to have created. Now if I could imagine a scene in my heard and the machine was able to process that into an image which I wanted to create, then I would be impressed. Our smartphones are already much smarter than our cameras on the technical aspects of photography. They also allow creative effects, but as regards the artistic expression and human documentary aspects a ‘homo sapiens’ is still need in the production chain, unless, of course, the category of machine created art really takes off. Then, if you could sell the machine created art on a crypto market also controlled by machines, then the story about WALL-E being the last ‘man’ left on the planet might not seem so far-fetched.

Great fun as usual, Keith.

William

Hi William, I had not heard of WALL-E until I learned about DALL-E 2. I plan to watch it over Christmas. I am glad the article triggered a happy memory of time spent with your grandson when he was younger. Even the most imaginative amongst us would probably struggle to come up with a vision and a detailed description of an image that could match those captured with our cameras. Then again, perhaps if everyone has brain implants installed, directly coupled to generative AI systems, who knows what will be possible. Thanks for embracing the article in the tongue-in-cheek manner in which it was written! Cheers, Keith

The concept and technology is amazing , and has come a long way. However, the output has the equivalent quality of a Casio 600 x 400 pixel camera from 30 years ago.

I remember having conversations with Mike at least ten years ago regarding “lensless imaging” and how it would destroy what we know as photography.

I thought at the time that I was merely being whimsical, but Ye Gods, whimsy is becoming reality!

Anyway, give me a Rollei or Leica and an appropriate roll of film any day… and I will get on with making my pretty average snaps…

… Oh and I forgot… a rail/bus ticket to somewhere that I haven’t been to yet (these are many).

Could someone pass me a sick-bag please?

Hi Martin, it is the case that the inner workings of deep learning ML models are not visible, hence their description as black boxes. Given the season, what about asking DALL-E 2 to come up with a picture of a one-horse open sleigh on its way to Mars with Elon Musk holding the reins, in the style of Picasso? Do you think such an image exists today? I suppose we could Google it and see. 😉 Cheers! Keith

You perhaps missed my point. Of course no such image, or anything like it exists today. But pictures of sleighs, space travel, and Elon Musk do exist, and many thousands of images with such themes exist for the AI to draw on to synthesize the result it presents. In fact, you may say that a string of words like a generic Kissing Blonde might indeed be used to synthesizes a portion of a picture, if the AI has information on what “kissing” and “blonde” mean. But Elon Musk is a specific person with a specific set of facial features. I submit DALL-E 2 cannot synthesize a reasonable likeness of Elon Musk without an image of him in it’s database.

“I still find it remarkable that a machine can generate a recognizable image purely based upon its interpretation of a string of words a human used to describe that image.”

How do we know that’s actually what’s happening? As I said earlier, color me skeptical.

Hi Martin, I applaud your skepticism. Are you a scientist by any chance? 🙂 I invite you to craft a description of an image you would like to have generated, which is unlikely to exist anywhere today, and see what DALL-E 2 comes up with. Confirming or refuting hypotheses through experimentation is how the world of science determines what is true and what is fiction! Cheers, Keith

I am an engineer.

With respect, observing the input and output does not explain the process.

I am skeptical that DALL-E 2 synthesizes these images from scratch. I suggest that it has a database of images to draw upon, and then creates a new one with elements from its database..

Yes. It treats the stored images as data, just the same as the “string of words” that Keith describes being input into the system. It’s all just data, ones and zeros and then re-arranged. Like moving the furniture around a bit. You could also program the computer to randomly generate images like this. Whether you call that creative is another matter entirely.

Hi everyone! Thanks for you thoughtful comments – the general tenor of which seems to indicate that the DALL-E 2-generated images are underwhelming, and the emergence of AI-powered image generation (or AI-powered systems in general) is an unwelcome and even insignificant development!

I would not consider my exploration of DALL-E 2 either systematic or comprehensive, and from other DALL-E 2 images I have seen, it could be that replicating photos is not the best use of its capabilities; it seems more cartoonish fantasy images are more successful. Nevertheless, I still find it remarkable that a machine can generate a recognizable image purely based upon its interpretation of a string of words a human used to describe that image.

I am neither a defender or advocate of this system, but as with other machine learning-based systems, I expect it to improve steadily as more data are incorporated into its training set.

For anyone interested in trying it for themselves, here is a link. One can enter up to 50 queries for free in the first month.

https://openai.com/dall-e-2/

Cheers! Keith

I only see this mostly affecting stock photography which has already been killed off by countless amateurs giving their images away. I would find no joy or satisfaction with a computer creating the image instead of me. Photography and the other creative arts will always be around for the creatives.

By the way, you say this system was named after Wall-E and Salvador Dali. Clever idea actually, but

have you ever really looked closely at Salvador Dali’s artwork? His imagination is coupled to his physical painting skill and ability to render fine detail. The images you show here all look like poor photoshop creations to me. Jonathan Slack’s images look far and away better than the dreamed up picture reproduced here.You created a picture that looks like anyone could have taken it. I don’t think it has any of Jonathan’s DNA. How could it? It wasn’t made by him. It’s simply your idea and the software’s idea of what a Jonathan Slack photo looks like. It’s unremarkable.Same with the mountain photo. Ansel Adams or any other respectable mountain photographer, would likely not even include that in the B roll.

My life is not being “transformed by artificial intelligence”and it doesn’t need to be.

I don’t need artificial and virtual when I already have reality.

If commanded to produce an image ‘in the style of’, e.g., a well known “owner with dog(s)” photographer, the intellectual property lawyers might be rubbing their hands with glee.

I much prefer the originals as the AI ca’t get the deep shadows you have on the first image for example. In that case AI could be called absence of intelligence. I remember my students in the time of covid when most of the teaching was done through the computer kept complaining about the lack of interaction and human touch. I exactly feel the same when viewing AI images but it may be just me.

Enjoy the weekend. Here its awfully cold compared to sunny San Diego

Jean

“I much prefer the originals as the AI can’t get the deep shadows you have on the first image for example. In that case AI could be called absence of intelligence”.

I must say I also prefer blondes with a bottom half! 🧐

Interesting 🧐 Just one question: where is the other half of the kissing blond?

And the real one, I’ve eventually seen art based on that or other systems, like that first portrait auctioned in Christie’s perhaps. Well, is DALL-E 2 or similar a huge computer or a powerful software that even could run in your cellphone?

An interesting companion to this article: The Guardian’s “The inherent misogyny of AI portraits “. Although AI is supposed to be value-neutral, it has always suffered from the limitations of the data on which it’s been trained.

This is definitely ‘the most different photo-essay to wake up to’!

I’m going to over-Overgaard you: he has a series he titles ‘The Story Behind That Picture.” I feel that’s what missing here: no story in these renderings, or, perhaps, no soul. Another way to say it: if you had requested “Looking down on a beach in Crete with tall cliffs on the left” without the qualifier “in the style of Jonathan Slack’”, would you get essentially the same rendering?

Two additional thoughts:

a) All the renderings seem blurred. One could argue this will improve with technology, but I wonder. If the algorithms have classes like “window” that can be pasted into the super-ordinate class “building”, then I doubt we’ll see detailed wndows (cf the rendering’Street photography in a German city in summertime’.

b) I was comparing your Gaudi to the Gaudi-like rendering; the treatment of light, and highlights, is very different. Do you think the rendering engine uses ideas like varied light sources, or point of view with respect to light? I’m probably wrong here, but it seems several of the renderings are flat.

Right now it is a wonderful world we live in, at least with respect to products available. Just 20 years ago who could have imagined we could purchase brand-new mechanical marvels of camera construction, right alongside the latest all-digital photographic gizmos?

Wait – we can have it both ways at once! A camera that is a mechanical marvel on the outside and a digital gizmo on the inside! And can use our old legacy optics as well.

This cannot last. It is only because we have older generations with the both the desire and means to acquire such luxuries. Soon there will be nothing but smart phones and the likes of DALL-E.

A friend of mine has recently got into this. He’s a creative commercial photographer and now content creator in London. I don’t know which system he uses but it’s serving him well. The random and yes ‘Amazing’ stuff he’s posting up on FB is completely surreal and almost too good.

I find myself not knowing what to make of it, or even how to respond because it’s just not real. It’s certainly not photography, but we live in a world of not real now all around us. I’ll leave it at that rather than drop into a rabbit hole I can’t climb out of.

I see comments on FB about these images from other friends, ‘Wow what an amazing photo’, I don’t contribute almost as if I’m frozen in time but inside I’m screaming ‘But it’s not actually a photo is it?’. I don’t know where this will end, or where it will leave us photographers. Worse still us photographers using 1950s design over expensive cameras with manual focus lenses. Luckily for me as I get older though, I care much less about these things and try to fill my time as much as possible with things I enjoy no matter how antiquated or less ‘amazing’ they might seem to those who replace me. Didn’t someone once say ‘Keep it real’. I don’t think that actually applies any more. 🙂

“Just to be clear, that computer-hallucinated image did not exist previously and was not patched together from existing images”.

Color me skeptical on that. Anyway, in the Brave New World would one not want pictures of existing places? How about tying the DALL-E system to surveillance cameras all over the world, as in the TV show Person of Interest? Plus stock photo agencies. And such sites as Pinterest. One could ask to have a picture of oneself chatting with King Charles III in front of the stone images on Easter Island. Digitally enhanced.